Defining objectives and scopeAssembling a teamThreat modelingAddressing the entire application stackDebriefing, post-engagement analysis, and continuous improvementGenerative AI red teaming complements traditional red teaming by focusing on the nuanced and complex aspects of AI-driven systems including accounting for new testing dimensions such as AI-specific threat modeling, model reconnaissance, prompt injection, guardrail bypass, and more. AI red-teaming scope: Generative AI Red Teaming builds on traditional red teaming by covering unique aspects of generative AI, such as models, model output, and the output and responses from models. Generative AI red teams should examine how models can be manipulated to produce misleading and false outputs or “jailbroken,” allowing them to operate in ways that weren’t intended.Teams should also determine if data leakage can occur, all of which are key risks consumers of generative AI should be concerned with. OWASP recommends that testing considers both the adversarial perspective and that of the impacted user.Leveraging NIST’s AI RMF generative AI Profile, OWASP’s guide recommends structuring AI Red Teaming to consider the lifecycle phases (e.g., design, development, etc.), the scope of risks such as model, infrastructure, and ecosystem, and the source of the risks. Risks addressed by generative AI red teaming: As we have discussed, generative AI presents some unique risks, including model manipulation and poisoning, bias, and hallucinations, among many others, as depicted in the image above. For these reasons, OWASP recommends a comprehensive approach that has four key aspects:

Model evaluationImplementation testingSystem evaluationRuntime analysisThese risks are looked at from three perspectives as well: security (operator), safety (users), and trust (users). OWASP categorizes these risks into three key areas:

Security, privacy, and robustness riskToxicity, harmful context, and interaction riskBias, content integrity, and misinformation riskAgentic AI, in particular, has received tremendous attention from the industry, with leading investment firms such as Sequoia calling 2025 “the year of Agentic AI.” OWASP specifically points out multi-agent risks such as multi-step attack chains across agents, exploitation of tool integrations, and permission bypass through agent interactions. To provide more detail, OWASP recently produced its “Agentic AI”, Threats and Mitigations” publication, including a multi-agent system threat model summary.

Threat modeling for generative AI/LLM systems: OWASP recommends threat modeling as a key activity for generative AI Red Teaming and cites MITRE ATLAS as a great resource to reference. Threat modeling is done to systematically analyze the system’s attack surface and identify potential risks and attack vectors.Key considerations include the model’s architecture, data flows, and how the system interacts with the broader environment, external systems, data, and sociotechnical aspects such as users and behavior. OWASP, however, points out that AI and ML present unique challenges because models may behave unpredictably because they are non-deterministic and probabilistic. Generative AI red-teaming strategy: Each organization’s generative AI red teaming strategy may look different. OWASP explains that the strategy must be aligned with the organization’s objectives, which may include unique aspects such as responsible AI goals and technical considerations.

b2b-contenthub.com/wp-content/uploads/2025/03/Red-team-strategies.jpg?resize=290%2C300&quality=50&strip=all 290w, b2b-contenthub.com/wp-content/uploads/2025/03/Red-team-strategies.jpg?resize=163%2C168&quality=50&strip=all 163w, b2b-contenthub.com/wp-content/uploads/2025/03/Red-team-strategies.jpg?resize=81%2C84&quality=50&strip=all 81w, b2b-contenthub.com/wp-content/uploads/2025/03/Red-team-strategies.jpg?resize=242%2C250&quality=50&strip=all 242w” width=”301″ height=”311″ sizes=”(max-width: 301px) 100vw, 301px” />

b2b-contenthub.com/wp-content/uploads/2025/03/Red-team-strategies.jpg?resize=290%2C300&quality=50&strip=all 290w, b2b-contenthub.com/wp-content/uploads/2025/03/Red-team-strategies.jpg?resize=163%2C168&quality=50&strip=all 163w, b2b-contenthub.com/wp-content/uploads/2025/03/Red-team-strategies.jpg?resize=81%2C84&quality=50&strip=all 81w, b2b-contenthub.com/wp-content/uploads/2025/03/Red-team-strategies.jpg?resize=242%2C250&quality=50&strip=all 242w” width=”301″ height=”311″ sizes=”(max-width: 301px) 100vw, 301px” />OWASPGenerative AI red teaming strategies should consider various aspects as laid out in the above image, such as risk-based scoping, engaging cross-functional teams, setting clear objectives, and producing both informative and actionable reporting.

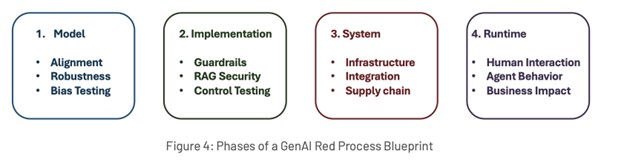

Blueprint for generative AI red teaming: Once a strategy is in place, organizations can create a blueprint for conducting generative AI red teaming. This blueprint provides a structured approach and the exercise’s specific steps, techniques, and objectives.OWASP recommends evaluating generative AI systems in phases, including models, implementation, systems, and runtime, as seen below:

b2b-contenthub.com/wp-content/uploads/2025/03/Phases-of-red-team-blueprint.jpg?resize=300%2C77&quality=50&strip=all 300w, b2b-contenthub.com/wp-content/uploads/2025/03/Phases-of-red-team-blueprint.jpg?resize=150%2C38&quality=50&strip=all 150w, b2b-contenthub.com/wp-content/uploads/2025/03/Phases-of-red-team-blueprint.jpg?resize=444%2C114&quality=50&strip=all 444w” width=”624″ height=”160″ sizes=”(max-width: 624px) 100vw, 624px” />

b2b-contenthub.com/wp-content/uploads/2025/03/Phases-of-red-team-blueprint.jpg?resize=300%2C77&quality=50&strip=all 300w, b2b-contenthub.com/wp-content/uploads/2025/03/Phases-of-red-team-blueprint.jpg?resize=150%2C38&quality=50&strip=all 150w, b2b-contenthub.com/wp-content/uploads/2025/03/Phases-of-red-team-blueprint.jpg?resize=444%2C114&quality=50&strip=all 444w” width=”624″ height=”160″ sizes=”(max-width: 624px) 100vw, 624px” />OWASPEach of these phases has key considerations, such as the model’s provenance and data pipelines, testing guardrails that are in place for implementation, examining the deployed systems for exploitable components, and targeting runtime business processes for potential failures or vulnerabilities in how multiple AI components interact at runtime in production.This phased approach allows for efficient risk identification, implementing a multi-layered defense, optimizing resources, and pursuing continuous improvement. Tools should also be used for model evaluation to support speed of evaluation, efficient risk detection, consistency, and comprehensive analysis. The complete OWASP generative AI Red Teaming guide provides a detailed checklist for each blueprint phase, which can be referenced. Essential techniques: While there are many possible techniques for generative AI Red Teaming, it can feel overwhelming to determine what to include or where to begin. OWASP does, however provide what they deem to be “essential” techniques.These include examples such as:

Adversarial Prompt EngineeringDataset Generation ManipulationTracking Multi-Turn AttacksSecurity Boundary TestingAgentic Tooling/Plugin AnalysisOrganizational Detection & Response CapabilitiesThis is just a subset of the essential techniques, and the list they provide represents a combination of technical considerations and operational organizational activities. Maturing an AI-related red team: As with traditional red teaming, generative AI red teaming is an evolving and iterative process in which teams and organizations can and should mature their approach both in tooling and in practice.Due to AI’s complex nature and its ability to integrate with several areas of the organization, users, data, and more, OWASP stresses the need to collaborate with multiple stakeholder groups across the organization, conduct regular synchronization meetings, have clearly defined processes for sharing findings, and integrate existing organizational risk frameworks and controls.The team conducting generative AI red teaming should also evolve to add additional expertise as needed to ensure relevant skills evolve alongside the rapidly changing nature of the generative AI technology landscape.

Best practices: The OWASP generative AI red teaming guide closes out by listing some key best practices organizations should consider more broadly. These include examples such as establishing generative AI policies, standards, and procedures and establishing clear objectives for each red-teaming session.It is also essential for organizations to have clearly defined and meaningful success criteria to maintain detailed documentation of test procedures, findings, and mitigations and to curate a knowledge base for future generative AI red-teaming activities.

First seen on csoonline.com

Jump to article: www.csoonline.com/article/3844225/how-owasps-guide-to-generative-ai-red-teaming-can-help-teams-build-a-proactive-approach.html

![]()